I often see the fallacy that software is more secure simply because it is Open Source. Don't get me wrong, I'm a huge fan of and believer in Open Source. It has become more and more a part of our everyday lives, and has changed the world for the better. But the notion that publicly available source code automatically makes software more secure is incorrect. Commercial software still holds value as well.

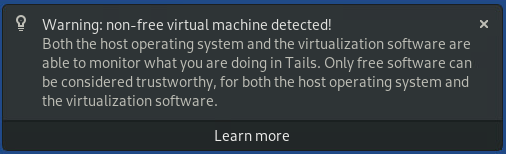

This whole post initially started when I was trying out a new OS:

To me this just screamed FUD. By "free", they were likely referring to Open Source (the two terms are often used interchangeably, even though they are not the same thing). You can read more about their position on their site: https://tails.boum.org/doc/about/trust/index.en.html#free_software. I will leave readers to draw their own conclusions from it.

But, back to the point at hand.

Volume

The first hurdle is the sheer volume of code. According to Sonatype's 2019 State of the Software Supply Chain report, there are now 21,448 new open source releases per day! Having humans manually inspect all of that code is simply not going to happen, period. The volume is mind-boggling (and likely only going to get worse). The only approach that will help is automation, but that alone will not solve the problem. Attackers are extremely clever, and I have no doubt that they will find ways around it (they already have). It becomes the classic cat-and-mouse game of the good guys vs the bad guys.
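To put that number in perspective, here is a rough back-of-the-envelope sketch. The only figure taken from the report is the 21,448 releases per day; the review rate of four releases per reviewer per day is purely an assumption for illustration, and the function name is hypothetical.

```python
import math

# Figure quoted above from Sonatype's 2019 report.
RELEASES_PER_DAY = 21_448


def reviewers_needed(releases_per_day: int, reviews_per_person_per_day: float) -> int:
    """Full-time reviewers needed to keep pace, rounded up."""
    if reviews_per_person_per_day <= 0:
        raise ValueError("review rate must be positive")
    return math.ceil(releases_per_day / reviews_per_person_per_day)


# Simple multiplication: releases in a 365-day year at the quoted daily rate.
releases_per_year = RELEASES_PER_DAY * 365

# ASSUMPTION: one reviewer can properly inspect four releases per day.
# This number is made up for illustration; real review speed varies wildly.
full_time_reviewers = reviewers_needed(RELEASES_PER_DAY, 4)

print(f"{releases_per_year:,} releases per year")
print(f"{full_time_reviewers:,} full-time reviewers at 4 reviews per day")
```

Even under that generous assumption, you would need thousands of people doing nothing but reviewing, every single day.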

Lack of Investment

Open Source projects are often run by an individual or a small team with little to no resourcing. Expecting them to review all code and commits is, again, not going to happen. Even if the review is somehow crowdsourced, it isn't going to scale well. A developer working on a library in their spare time is unlikely to have every line of their code reviewed, if any of it is reviewed at all.

Maintenance

And if something is identified, what happens if the developer doesn't fix it? There could be several reasons for this: they may no longer support the project, they may disagree with the finding, and so on. It's all very well identifying a flaw, but the risk does not go away until it has been fixed. And how well is the finding publicized, so that those who use the software know to update it?

Examples

One claim I've seen is "we trust open source since backdoors can't be injected into the code". Well, I've got news: attackers are not even bothering with the code. Instead, they inject their backdoors into the binaries which users download. One example is a Ruby library which checked the strength of a password (a fantastic place for a backdoor). The attackers did not modify the source code; instead, they modified the binary of the library and uploaded it to the RubyGems repository. Another example, again a Ruby library, was only found by chance; again the code was not modified, but the binary of the library was. A final example is the Docker Alpine image (a popular image on which many other Docker images are based). It was found to have no root password; this was initially fixed, but the fix was then undone, and the image went for a period of three and a half years without a root password.
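One partial defense against a tampered download is to compare the artifact you actually received with a digest you trust. The following is a minimal sketch, assuming you have a known-good SHA-256 digest from a channel the attacker does not control (for example, one you computed yourself from a build of the reviewed source). The function names are hypothetical, and this does nothing if the attacker can also replace the digest.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def artifact_matches(path: Path, expected_hex: str) -> bool:
    """True only if the downloaded artifact matches the trusted digest.

    ASSUMPTION: expected_hex was obtained out-of-band from a source
    you trust, not from the same place the artifact was downloaded.
    """
    actual = sha256_of_file(path)
    return hmac.compare_digest(actual, expected_hex.strip().lower())
```

If `artifact_matches` returns `False`, what you downloaded is not what you reviewed, regardless of how clean the public source code looks.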

Commercial

I've not said much so far about how commercial software may change this, but in some respects it does. Firstly, by its very nature, you typically have to pay for commercial software. That already goes some way towards addressing the lack of funding (it won't eliminate the problem completely, but vendors are in a far better position). This funding can also help finance things such as tooling, especially Source Code Analyzers (SCA) such as Veracode. There could also be contractual obligations in place, which means that an organization not acting in good faith could potentially face a lawsuit. Businesses exist to make money, and while they do take risks, I find it hard to believe they would take extreme and unnecessary risks with things such as backdoors. If found out, it could potentially lead to the end of the company. If I'm already paying for, say, a hypervisor to run my VMs, why would the company then inject backdoors into it? Sure, they could attempt to sell my details on, but is that really worth the risk to them?

Conclusion

So while it may seem obvious that being able to view the code makes software more secure, that is not the case most of the time. Yes, you can view the code, but you will not be able to review all of it. And in any case, sometimes the bad bit is not even put into the code. To me this is very much like an insider threat: it is VERY difficult to catch by looking at individuals and each of their actions. Instead, we should be monitoring their behavior. See someone coming in on weekends and downloading a huge amount of data? That should raise a red flag immediately (as opposed to monitoring all the data which the user downloads). Similarly with software: see an application making a call to something you wouldn't expect it to? Flag it! Also make sure that you have the appropriate fundamentals in place:

- Least privileges

- Monitoring, especially of violations

- Automate where you can
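As a rough illustration of flagging behavior rather than reviewing internals, here is a minimal sketch of a default-deny allowlist check. Everything in it is hypothetical: the application names, the hosts, and the assumption that you already have some way of observing outbound connections per application.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Connection:
    application: str
    host: str
    port: int


# ASSUMPTION: hypothetical applications and the only destinations
# each one is expected to talk to. An empty set means "no network at all".
EXPECTED = {
    "password-checker": set(),
    "backup-agent": {("backups.example.internal", 443)},
}


def unexpected_connections(observed, expected):
    """Return every observed connection that is not explicitly allowed.

    Applications missing from the allowlist are flagged as well
    (default deny), in line with least privilege.
    """
    flagged = []
    for conn in observed:
        allowed = expected.get(conn.application, set())
        if (conn.host, conn.port) not in allowed:
            flagged.append(conn)
    return flagged


observed = [
    Connection("backup-agent", "backups.example.internal", 443),
    Connection("password-checker", "collector.example.net", 443),
]
for conn in unexpected_connections(observed, EXPECTED):
    print(f"FLAG: {conn.application} -> {conn.host}:{conn.port}")
```

A password strength checker has no reason to talk to the network, so the second connection is flagged without anyone having read a line of its code.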

When you start doing these things, it doesn't matter whether the software is open source or closed. You are not reviewing the internals, but rather the behavior, and personally I feel that's going to provide much better protection.

In summary, while it may initially seem like a black-and-white issue, it is far from it. There are many variables to take into account, which is why you can't simply say Open Source = more secure. In some cases, yes. In others, no.